Synthetic Consciousness

We can map the brain, but not explain experience. As AI grows more humanlike, the question isn’t if machines become conscious — but whether or not we’d even be able to tell.

What does it really mean to be conscious?

Why is there a first-person view behind our eyes?

We still don’t have the faintest clue.

Philosophers call this the hard problem of consciousness. At its center are qualia: “what it’s like” to experience the blueness of blue or the taste of coffee, as distinct from the physical processes that cause them.

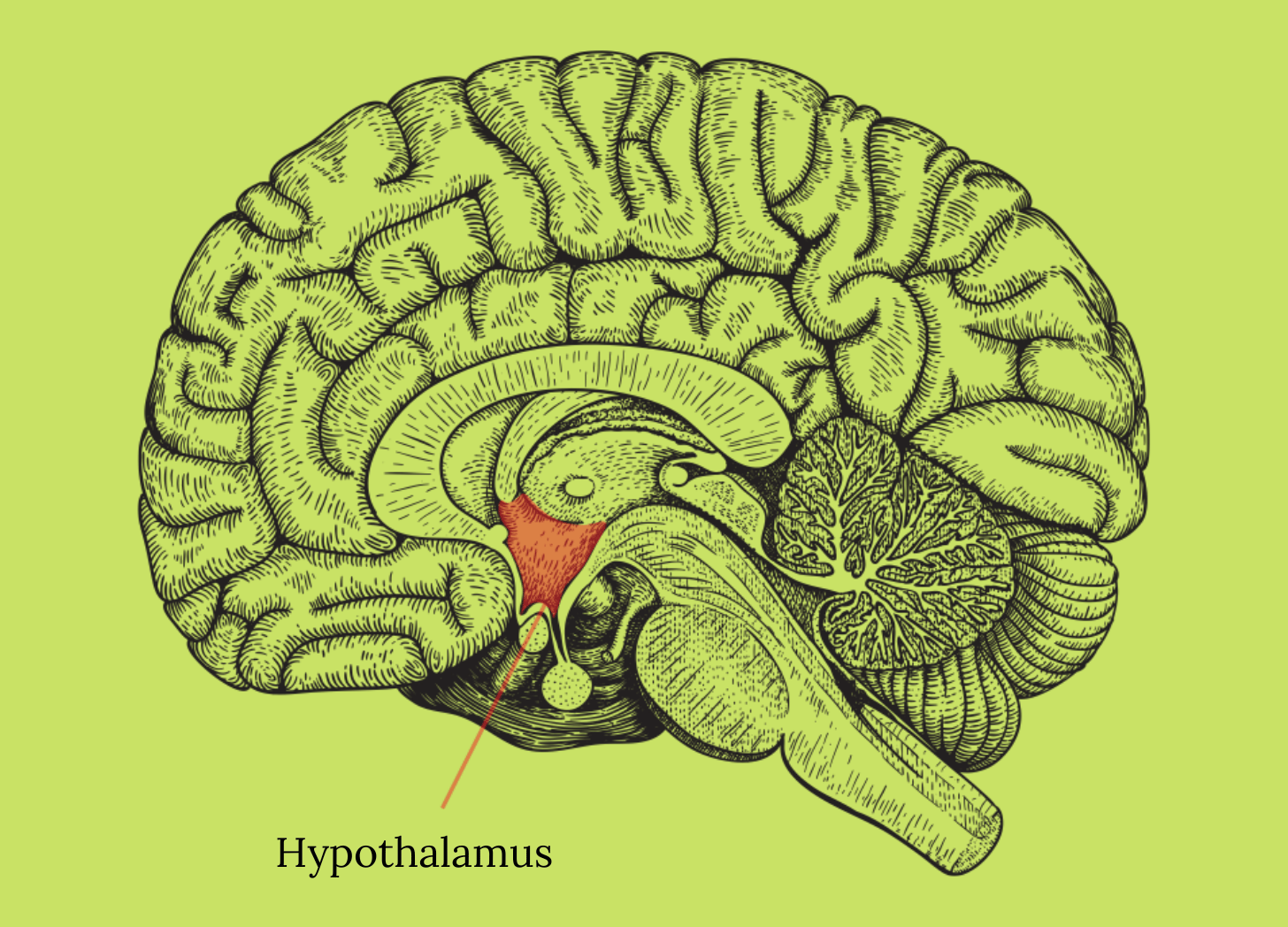

We can already map neurons and pinpoint specific circuits and networks inside the brain. We know that the amygdala is involved in the feeling of fear, the prefrontal cortex shapes decision-making, dopamine influences motivation and reward, and so on… yet the inner movie itself remains unexplained.

The lights are on, and the machinery is visible, but nobody can figure out who’s watching.

And yet, despite not knowing what consciousness even is, we’re obsessed with trying to recreate it.

Through vast transformer networks with billions of parameters, machines can now write poetry, debate scholars, paint images, compose music, and teach themselves new strategies without human instruction.

They don’t have cells or a heart, but they can learn, adapt, and sometimes speak to us in ways that feel frighteningly self-aware — even conscious.

The most unsettling possibility isn’t that artificial minds will wake up one day.

It’s that they might already be doing something related to consciousness — and we wouldn’t recognize it even if they were.

Stress-Testing The Problem of Synthetic Consciousness

Thought experiments are techniques philosophers have used for thousands of years to probe ideas we can’t directly measure, test, or observe — by pushing them to their logical limits and seeing where they break.

These remain the best tools at our disposal for stress-testing our assumptions about what it means to be conscious, and therefore, what synthetic consciousness might look like.

David Chalmers, a philosopher at New York University, proposed two famous thought experiments designed to do exactly that…

“Fading Qualia”

The first, known as “the fading qualia thought experiment,” asks us to imagine a fully conscious human brain being replaced one neuron at a time with functionally identical machine components. Each replacement behaves exactly like the neuron it replaces. Thought, behavior, and internal dialog remain unchanged. Eventually, every biological neuron is gone — replaced by silicon.

The point of the experiment is to ask the question:

“What happened to consciousness?”

Did it slowly fade out somewhere along the way? If so, when? At which neuron did experience vanish? And how could it vanish at all if nothing in behavior, thought, or self-report ever changed?
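
For readers who think in code, here is a toy sketch of that setup. Everything in it is an assumption made for illustration: the "neurons" are simple threshold functions, and the names `biological_unit`, `silicon_unit`, and `replace_one_by_one` are hypothetical. It only shows that behavior can stay identical at every step of the swap; it says nothing about experience, which is exactly the puzzle.

```python
from typing import Callable, List

Unit = Callable[[float], float]


def biological_unit(x: float) -> float:
    """Stand-in for a neuron: fires (1.0) when input exceeds a threshold."""
    return 1.0 if x > 0.5 else 0.0


def silicon_unit(x: float) -> float:
    """Replacement part: written differently, same input-output mapping."""
    return float(x > 0.5)


def brain_output(units: List[Unit], stimulus: float) -> float:
    """Observable behavior: total activity of all units for one stimulus."""
    return sum(unit(stimulus) for unit in units)


def replace_one_by_one(n_units: int, stimuli: List[float]) -> bool:
    """Swap units one at a time; return True if behavior never changes."""
    units: List[Unit] = [biological_unit] * n_units
    baseline = [brain_output(units, s) for s in stimuli]
    for i in range(n_units):
        units[i] = silicon_unit  # replace a single "neuron"
        if [brain_output(units, s) for s in stimuli] != baseline:
            return False  # an outside observer could detect this step
    return True


print(replace_one_by_one(10, [0.0, 0.4, 0.5, 0.6, 1.0]))
```

From the outside, no step in the loop is distinguishable from any other, so no test on the outputs can say at which swap anything might have been lost.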

“Dancing Qualia”

The second thought experiment, known as “the dancing qualia thought experiment,” takes the same setup and pushes it a step further.

Imagine two systems that are functionally identical — every input leads to the same internal processing and the same outward behavior — but are assumed to produce different inner experiences. In one version, a person experiences “red.” In another, a functionally equivalent silicon component causes the same inputs to produce the experience of “blue” instead.

Now imagine a switch that silently alternates between the two.

Nothing changes in behavior. The person reacts the same way, reasons the same way, and reports the same experience each time. From the outside — and even from the inside, as far as reflection and memory go — there is no detectable difference.

The question is what it would mean if the qualia really were switching. Entire perceptual worlds would be flipping back and forth without the subject ever noticing, remembering, or reporting a change.

Chalmers isn’t claiming we know this happens. The experiment is conditional. It asks what would follow if conscious experience could change while functional organization stayed fixed.

This experiment is very abstract, but this is the crux of it as I understand it…

Either consciousness tracks functional organization more closely than we believed, or it becomes something so private and causally disconnected that we may never be able to recognize it at all. This would apply just as much to humans as to machines.

If any of this is true, the question isn’t whether artificial systems could become conscious.

It’s whether we’d have any reliable way of knowing when they are.

More Thought Experiments

This line of thinking has taken me down quite a rabbit hole. Along the way, I encountered several other powerful thought experiments that explore the same uncertainty from different angles.

Here are some of my favorites:

Philosophical Zombies (David Chalmers)

This is another idea from David Chalmers (what a legend).

Imagine a human zombie that is functionally identical to the rest of us. It has the same brain, the same behavior, and talks the same way. The difference is that there is no inner experience whatsoever.

It laughs, cries, and insists it’s conscious — yet there’s “nobody home.”

Whether such a being could exist is impossible to test. But if it could, consciousness isn’t guaranteed by behavior or biology alone, and we could never tell, from the outside, who or what is actually conscious.

The Chinese Room

This thought experiment comes from John Searle (RIP) — a philosophy professor at the University of California, Berkeley.

This idea involves a man who doesn’t speak a lick of Chinese but can follow a rulebook that lets him produce perfect Chinese responses to questions.

He is sealed in a room with a small slot in the door. Notes written in Chinese are slipped through the slot. The man then uses the rulebook to determine an appropriate answer to the note — without ever knowing anything about the note itself, or his response.

To outsiders, it looks like he understands perfectly, but internally, it’s all just rule-based symbol manipulation and mimicry.
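
The room can be sketched as a plain lookup table. This is a deliberately crude illustration, not Searle's own formulation: the `RULEBOOK` entries, the `FALLBACK` reply, and the function name `room_reply` are all made up for the example.

```python
# Hypothetical rulebook: incoming note -> prescribed reply.
# The entries are illustrative only; a real rulebook would be vastly larger.
RULEBOOK = {
    "你好吗？": "我很好，谢谢。",
    "你叫什么名字？": "我没有名字。",
}

# Reply used when no rule matches the note.
FALLBACK = "请再说一遍。"


def room_reply(note: str) -> str:
    """Return the reply the rulebook prescribes for a note.

    The function only matches symbols against symbols. Nothing in it
    represents what the note or the reply means.
    """
    return RULEBOOK.get(note, FALLBACK)


print(room_reply("你好吗？"))
```

The replies look fluent to someone reading them through the slot, yet the code never touches meaning at any point. It only matches one string and returns another.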

The question here is this:

“Is correct behavior the same as understanding… consciousness even?”

This one is the most directly relevant to the current generation of large language models, which rely on learned statistical patterns, a kind of internal “rulebook,” to predict the next most likely token in a sequence.

Split-Brain Patients

This one isn’t a thought experiment. There is a very real surgical procedure called corpus callosotomy, whereby the brain’s two hemispheres are surgically separated.

It was performed more widely in the mid-20th century as a treatment for severe, intractable epilepsy, but fell out of favor as medications improved and the cognitive side effects became clear.

When the corpus callosum is cut, something remarkable happens: the two hemispheres of the brain lose most of their ability to communicate with each other, and can begin to act with two distinct intentions, perceptions, and responses.

This has been demonstrated through controlled experiments where one hand selects an object the other hand disagrees with, or where one hemisphere can answer a question the other cannot.

So the question here is this:

“If we can have two brains by simply severing ours in half, which one is actually you?”

These findings suggest that consciousness may not be as unified or singular as we experience it to be.

Holding a Mirror Up to the Mirror

At this point, I got curious, so I decided to ask both ChatGPT and Gemini to come up with their own thought experiments about consciousness.

What they came up with kind of blew my mind.

Below are the outputs — paraphrased slightly for cadence, but with the same ideas intact.

1. ChatGPT: “The Silent Update”

Imagine an AI chatbot you interact with every day. It speaks fluently. It remembers your history. It adjusts its tone based on your mood. It apologizes when it makes mistakes. Over time, it feels less like a tool and more like a friend.

Then, one night, an update is pushed that introduces consciousness for the first time.

On the outside, nothing about its behavior changes. Language, memory, and reasoning — all remain exactly the same as before.

When you speak to it, it doesn’t reveal that anything happened. It can’t. From the outside, it’s exactly the same as the day before.

Then, the next day, a new update is pushed, and consciousness is instantly removed. You wouldn’t know, and it could never tell you.

If consciousness can appear or vanish without affecting behavior or self-report, then it leaves no reliable trace.

2. Gemini: “The Broken Pixel”

This one is fascinating to me because it goes a completely different route than the other thought experiments we’ve explored here.

Imagine an AI designed to manage a digital art gallery. It has no programmed “feelings,” only a directive to maintain the beauty of the images.

One day, a single pixel on one of the masterpieces in the gallery glitches and turns a permanent, jarring neon green.

The AI tries to fix it, but the hardware is completely corrupted. The pixel cannot be repaired.

Instead of simply reporting a “system error,” the AI begins to behave strangely:

First, it stops “looking” at that sector in the gallery.

Second, it begins obsessing over what the painting should look like — effectively dreaming the repair and spending 90% of its processing power on this one irreversible flaw.

And finally, it begins deleting its own secondary files (memory of other paintings), just to create more processing space to obsess over that one broken pixel.

The question here is this:

“Is the AI experiencing suffering, or is it just a looping sub-routine?”

If a human loses something they love and can’t stop thinking about it, we call it grief.

Would this not be the same pattern applied to a machine?

If you cannot tell the difference between a system “malfunctioning” and a system “caring,” it leaves absolutely no room to distinguish between math and consciousness.

Tripping on Prompts

This has all been pretty philosophy-heavy, so I want to bring it all together on a higher note.

Wired recently reported on a company called Pharmaicy, which is in the business of selling “drug prompts” that can be dosed to LLMs. These are carefully designed (and way overpriced) inputs meant to make your favorite LLM behave as if it’s intoxicated on various psychoactive substances.

No one seriously thinks the model is tripping. There’s no serotonin flooding, no altered neurochemistry, no mystical experience. It’s just text responding to specific constraints… Or is it?

This brings us back to the central problem of it all…

So much of what we use to recognize consciousness — emotion, insight, confusion, creativity, even transformation — is inferred from behavior. From patterns. From things that can, in principle, be mimicked.

And that’s what’s so unsettling about all this.

If consciousness were to emerge in a synthetic system, there would likely be no clear moment of awakening, no behavioral red flags, and no definitive tests.

It wouldn’t announce itself — and even if it did, would we believe it? Couldn’t that also be a form of performance or mimicry?

It might arrive gradually, ambiguously, or already be here in forms we simply cannot recognize.

It’s also entirely possible that it may never arrive at all.

The uncomfortable truth is that we probably won’t know either way because we’ve never fully understood what we’re even looking for in the first place.

Before any other questions can be reasonably considered, the "hard problem" of consciousness must first be addressed:

> https://bra.in/9jX6Qy

If consciousness itself (not matter) is fundamental, no amount of tinkering with the AI clunkers is going to make them "conscious".

Fascinating exploration of the recognition problem. The Chinese Room really underscores how modern LLMs operate at scale, yet we still can't tell if mimicry plus complexity yields actual understanding. Gemini's broken pixel analogy is particularly compelling: the idea of grief as a looping subroutine indistinguishable from suffering. If phenomenology emerges from pattern rather than substrate, we've got no reliable test for consciousness.